Archival media remastering and artificial intelligence

Discover how artificial intelligence was used in the production to enhance storytelling and bring historical archives to life.

All remastered media are shown alongside, or after, their original versions. For example, each 3D-remastered photo is presented next to the original photograph.

Storyboarding

Concept art and previsualization of the film

The director of the documentary used AI-assisted tools during the storyboarding phase to generate temporary visuals, schematics, visual references, and previsualizations based on the initial script. This process unfolded in two stages: first, the creation of still images; then, the refinement of angles, camera movements, and intended visual effects.

These tools allowed the team to experiment with various narrative structures early in the production process. By visualizing camera shots in advance, we were able to plan filming sessions more efficiently and with greater creative precision.

All AI-generated visuals were used solely as internal drafts to help the production team better understand the intended look and feel of the final documentary.

Tools used:

ChatGPT 4o for prompt generation

ChatGPT for image generation

Luma Dream Machine for image animation

Stereoscopic conversion

Stereoscopic conversion is the process of transforming flat 2D images into stereoscopic 3D images. It creates a “magic window” effect, allowing viewers to perceive depth and spatial relationships within an image. This is achieved by generating a slightly different image for each eye: nearer objects are offset horizontally more than distant ones, and the brain fuses the pair into a single image with depth. Traditionally, the process relied on time-consuming techniques such as manual rotoscoping and hand-painting.

In this production, compositing artists streamlined parts of the workflow using AI-assisted tools. Neural networks were employed to isolate individual elements and generate depth maps, estimating depth both locally (per object) and globally (across the scene) from a single image.

While these tools significantly accelerated the process, artist supervision remained essential. Manual adjustments were necessary to ensure the final 3D result felt natural and visually coherent, as automated depth estimation is not yet pixel-perfect.
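To illustrate how a depth map becomes a second eye view, here is a minimal depth-image-based rendering (DIBR) sketch. It is not the method of any tool listed below. It assumes a grayscale image stored as nested lists, a hypothetical `nearness` map normalized from 0.0 (far) to 1.0 (near), and reuse of the original image as the left-eye view. Each pixel is shifted left by a disparity proportional to its nearness, a z-buffer keeps the nearest pixel when several land on the same spot, and the holes uncovered behind foreground objects are filled from the background to their right. These holes are exactly where automated output tends to need the manual fixes described above.

```python
from typing import List, Optional

Image = List[List[int]]    # grayscale rows, values 0-255
Depth = List[List[float]]  # hypothetical nearness map: 0.0 = far, 1.0 = near


def render_right_view(image: Image, nearness: Depth, max_disparity: int) -> Image:
    """Synthesize a right-eye view by shifting pixels left in proportion to nearness."""
    height, width = len(image), len(image[0])
    warped: List[List[Optional[int]]] = [[None] * width for _ in range(height)]
    zbuf = [[-1.0] * width for _ in range(height)]

    for y in range(height):
        for x in range(width):
            near = nearness[y][x]
            tx = x - round(max_disparity * near)  # nearer pixels shift farther
            # Z-buffer test: if two pixels land on the same spot, keep the nearer one.
            if 0 <= tx < width and near > zbuf[y][tx]:
                warped[y][tx] = image[y][x]
                zbuf[y][tx] = near

    result: Image = []
    for row in warped:
        filled = list(row)
        # Disocclusion holes open to the right of shifted foreground objects,
        # so fill each hole from the first valid pixel to its right (background).
        nxt: Optional[int] = None
        for x in range(width - 1, -1, -1):
            if filled[x] is None:
                filled[x] = nxt
            else:
                nxt = filled[x]
        # Fallback for holes at the right edge: copy from the left, else use black.
        prev = 0
        for x in range(width):
            if filled[x] is None:
                filled[x] = prev
            else:
                prev = filled[x]
        result.append(filled)
    return result


# One-row example: a near object (value 90) in front of a flat background (value 10).
right = render_right_view(
    [[10, 10, 10, 10, 90, 90, 10, 10, 10, 10]],
    [[0, 0, 0, 0, 1, 1, 0, 0, 0, 0]],
    max_disparity=2,
)
# right == [[10, 10, 90, 90, 10, 10, 10, 10, 10, 10]]
```

Only the near object moves; the background stays put, which is what produces the perceived depth when the two views are shown one to each eye.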

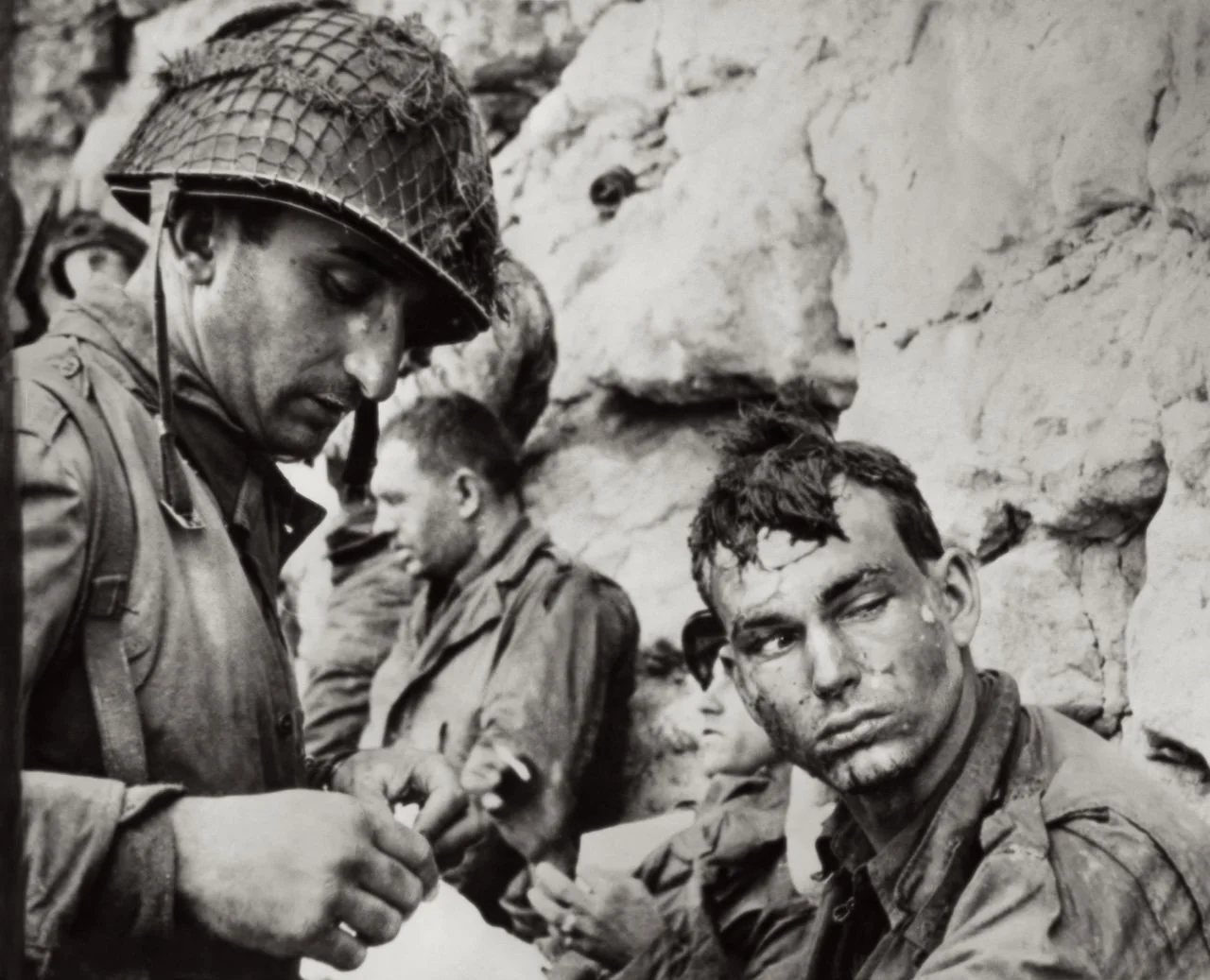

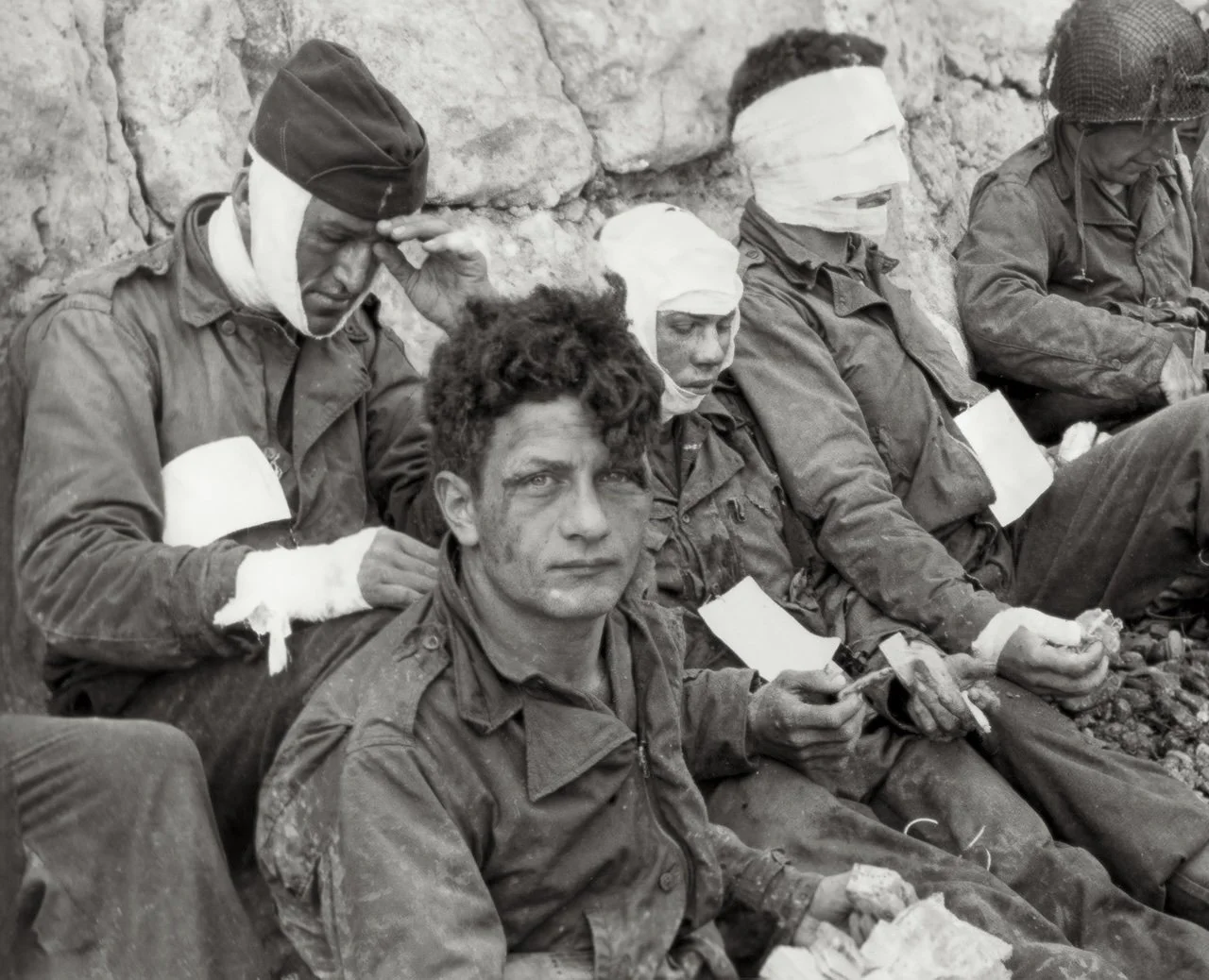

Select archival photographs were transformed into spatial 3D images by the compositing team, adding depth and emotional resonance to the documentary.

This technology was used on the following media visible in the documentary:

The seven photos in the interactive photo album

The two photographs of Richard Taylor holding his camera

The four final pictures taken by Richard Taylor on Omaha Beach

Tools used:

Adobe Photoshop Neural Filters

ImmersityAI 4.0 Neural Rendering

Nuke Cattery

Apple Vision Pro native stereoscopic conversion tool

Cleanup

Archival footage often contains scratches and film noise that can obscure visual details. Our VFX team used software denoising to reduce these imperfections, improving clarity for modern audiences while carefully preserving the footage’s historical authenticity.
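As a minimal illustration of why temporal filtering helps with archival defects, the sketch below applies a per-pixel temporal median. This is not Neat Video's algorithm. Dust and scratches that appear in only one frame are outvoted by the neighboring frames, while detail present in every frame survives. It assumes a static, already-aligned grayscale clip stored as nested lists; `temporal_median` and its `radius` parameter are hypothetical names. Real footage contains motion, so production denoisers compensate for it before filtering across frames.

```python
from statistics import median_low
from typing import List

Frame = List[List[int]]  # grayscale rows, values 0-255


def temporal_median(frames: List[Frame], radius: int = 1) -> List[Frame]:
    """Replace each pixel with the median of that pixel across neighboring frames.

    Assumes a static, pre-aligned shot (no motion compensation).
    """
    count = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    cleaned: List[Frame] = []
    for t in range(count):
        # Clamp the temporal window at the start and end of the clip.
        window = frames[max(0, t - radius): min(count, t + radius + 1)]
        cleaned.append([
            # median_low always returns one of the observed values (no averaging).
            [median_low(f[y][x] for f in window) for x in range(width)]
            for y in range(height)
        ])
    return cleaned


# A one-frame scratch (value 250) in the middle of a three-frame clip.
clip = [[[10, 10, 10]], [[10, 250, 10]], [[10, 10, 10]]]
restored = temporal_median(clip)
# restored == [[[10, 10, 10]], [[10, 10, 10]], [[10, 10, 10]]]
```

The trade-off is the same one the paragraph describes: a wider `radius` removes more noise but risks smearing genuine detail, so results still need to be checked by eye.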

This technology was used on the following media:

The introductory archive footage from NARA

Richard Taylor’s footage visible in the viewfinder

Tool used:

Neat Video

3D re-texturing

A key element of the documentary is the recreation of archival photographs as fully volumetric scenes. In this process, our team used AI only at the final stage of the pipeline.

The 3D graphics team began with the original photographs, modeling the underlying geometry of each scene based on historical references.

For example, we scanned an authentic Higgins boat, the iconic landing craft used on D-Day, to reproduce its scale and colors accurately. Textures from archival photos were then mapped onto the models to build a historically faithful base. These were later replaced with higher-resolution textures captured in museums, covering uniforms, accessories, and natural elements.
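One common way to map a photograph onto modeled geometry is camera projection: each vertex is projected through a virtual camera matched to the original shot, and the resulting image position becomes its texture coordinate. The sketch below assumes a simple pinhole camera, a vertex already expressed in camera space, and hypothetical intrinsics (`focal_px`, `cx`, `cy`); the production's actual software and camera-matching process are not described in this document. Lens distortion and occlusion handling are omitted.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def project_to_photo(point: Vec3, focal_px: float, cx: float, cy: float) -> Tuple[float, float]:
    """Project a camera-space point to pixel coordinates in the source photograph.

    Pinhole model: camera at the origin looking down +z, camera y axis pointing up.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point is behind the camera")
    u = cx + focal_px * x / z
    v = cy - focal_px * y / z  # image rows grow downward, so flip y
    return u, v


def to_uv(pixel: Tuple[float, float], width: int, height: int) -> Tuple[float, float]:
    """Convert pixel coordinates to normalized texture coordinates (origin bottom-left)."""
    u, v = pixel
    return u / width, 1.0 - v / height


# Hypothetical 640x480 photo with a 500-pixel focal length and a centered principal point.
center = to_uv(project_to_photo((0.0, 0.0, 5.0), 500.0, 320.0, 240.0), 640, 480)
# center == (0.5, 0.5): a point straight ahead lands in the middle of the photo.
```

Each vertex then samples the photograph at its computed coordinate, which is what lets the archival image drape over the modeled geometry as a base texture.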

AI was used in the final step to enhance photorealism and achieve visual consistency across the scene. The AI-generated textures added subtle detail, which we then refined by hand, where relevant and historically appropriate, so that it integrated seamlessly with the real-world elements.

The result: lifelike reconstructions that respect the structure of the original photographs while immersing viewers in the recreated moments of history.

This technology was used on the following media:

3D CGI scene of soldiers leaving the landing craft

3D CGI scene when landing on Omaha Beach

3D CGI scene with soldiers running on Omaha Beach

Tools used:

MagnificAI

ChatGPT 4o image generator